Which Of The Following Characteristics About Big Data Is Not True?

Overview:

- Understand what Big Data is and why it is relevant in today's world

- Get to know the characteristics of Big Data

Introduction

The term "Big Data" is a bit of a misnomer since it implies that pre-existing data is somehow modest (it isn't) or that the only challenge is its sheer size (size is one of them, but there are often more).

In short, the term Big Data applies to information that can't be processed or analyzed using traditional processes or tools.

Increasingly, organizations today are facing more and more Big Data challenges. They have access to a wealth of information, but they don't know how to get value out of it because it is sitting in its most raw form or in a semi-structured or unstructured format. As a result, they don't even know whether it's worth keeping (or whether they are even able to keep it, for that matter).

In this article, we look into the concept of Big Data and what it is all about.

Table of Contents

- What is Big Data?

- Characteristics of Big Data

- The Volume of Data

- The Variety of Data

- The Velocity of Data

What is Big Data?

We're a part of it, every day!

An IBM survey found that over half of the business leaders today realize they don't have access to the insights they need to do their jobs. Companies are facing these challenges in a climate where they have the ability to store anything and they are generating data like never before in history; combined, this presents a real information challenge.

It's a conundrum: today's business has more access to potential insight than ever before, yet as this potential gold mine of data piles up, the percentage of data the business can process is going down, and fast. Quite simply, the Big Data era is in full force today because the world is changing.

Through instrumentation, we're able to sense more things, and if we can sense it, we tend to try to store it (or at least some of it). Through advances in communications technology, people and things are becoming increasingly interconnected, and not just some of the time, but all of the time. This interconnectivity rate is a runaway train. Generally referred to as machine-to-machine (M2M), interconnectivity is responsible for double-digit year-over-year (YoY) data growth rates.

Finally, because small integrated circuits are now so inexpensive, we're able to add intelligence to almost everything. Even something as mundane as a railway car has hundreds of sensors. On a railway car, these sensors track such things as the conditions experienced by the rail car, the state of individual parts, and GPS-based data for shipment tracking and logistics. After train derailments that claimed extensive losses of life, governments introduced regulations requiring that this kind of data be stored and analyzed to prevent future disasters.

Rail cars are also becoming more intelligent: processors have been added to interpret sensor data on parts prone to wear, such as bearings, to identify parts that need repair before they fail and cause further damage, or worse, disaster. But it's not just the rail cars that are intelligent: the actual rails have sensors every few feet. What's more, the data storage requirements cover the whole ecosystem: cars, rails, railroad crossing sensors, weather patterns that cause rail movements, and so on.
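To make the bearing example concrete, here is a minimal Python sketch of how an on-board processor might flag bearings for repair. It assumes a simple rule the article does not specify: average each bearing's most recent temperature readings and compare the result against a fixed threshold. The threshold, window size, bearing IDs, and readings are all hypothetical; real systems would rely on vendor-specific sensors and more sophisticated models.

```python
from statistics import mean

# Hypothetical values: the article gives no real thresholds or window sizes.
TEMP_ALERT_C = 90.0   # alert threshold in degrees Celsius
WINDOW = 3            # number of most recent readings to average


def bearings_needing_repair(readings: dict[str, list[float]],
                            threshold: float = TEMP_ALERT_C,
                            window: int = WINDOW) -> list[str]:
    """Return IDs of bearings whose recent average temperature exceeds the threshold."""
    flagged = []
    for bearing_id, temps in readings.items():
        recent = temps[-window:]          # only the latest readings matter
        if recent and mean(recent) > threshold:
            flagged.append(bearing_id)
    return sorted(flagged)


# Hypothetical sensor readings per bearing, oldest first.
readings = {
    "car17-axle1-L": [71.0, 72.5, 73.0, 72.8],
    "car17-axle2-R": [80.0, 88.0, 93.5, 97.0],   # steadily heating up
    "car18-axle1-L": [65.0, 95.0, 70.0, 69.0],   # one spike, average stays low
}
print(bearings_needing_repair(readings))  # ['car17-axle2-R']
```

Averaging over a short window keeps a single noisy spike (as on the third bearing) from triggering a repair order, at the cost of reacting a few readings later.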

Now add to this the tracking of a rail car's cargo load and its arrival and departure times, and you can very quickly see you've got a Big Data problem on your hands. Even if every bit of this data was relational (and it's not), it is all going to be raw and have very different formats, which makes processing it in a traditional relational system impractical or impossible. Rail cars are just one example, but everywhere we look, we see domains with velocity, volume, and variety combining to create the Big Data problem.

What are the Characteristics of Big Data?

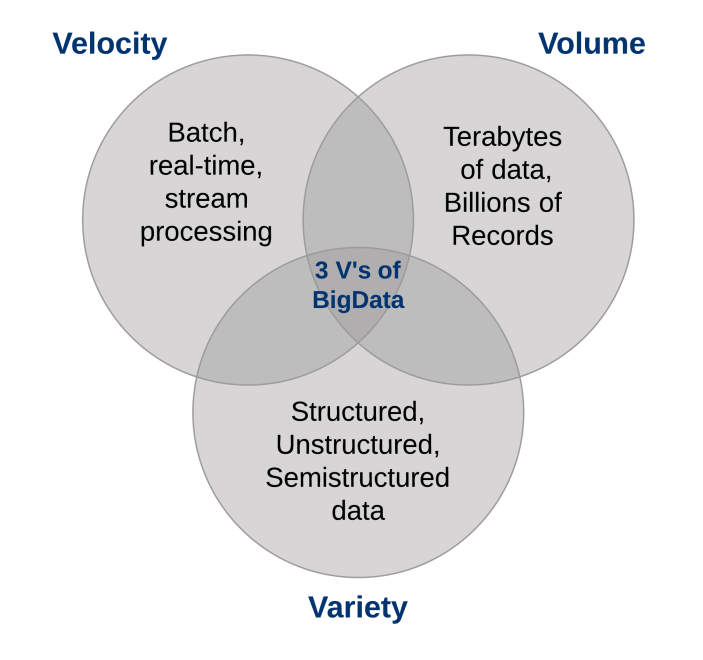

Three characteristics define Big Data: volume, variety, and velocity.

Together, these characteristics define "Big Data". They have created the need for a new class of capabilities to augment the way things are done today, providing a better line of sight into and control over our existing knowledge domains, and the ability to act on them.

1. The Volume of Data

The sheer volume of data being stored today is exploding. In the year 2000, 800,000 petabytes (PB) of data were stored in the world, and this figure was projected to reach 35 zettabytes (ZB) by 2020. Twitter alone generates more than 7 terabytes (TB) of data every day, Facebook 10 TB, and some enterprises generate terabytes of data every hour of every day of the year. It's no longer unheard of for individual enterprises to have storage clusters holding petabytes of data. Of course, a lot of the data being created today isn't analyzed at all, and that's another problem that needs to be considered.
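The volume figures above are easier to compare once they share a unit. This short sketch assumes decimal (SI) units, where 1 PB = 1,000 TB and 1 ZB = 1,000,000 PB, and it assumes smooth compound growth between the two data points, which the article does not claim; the rate it prints is an implied average, not a measured one.

```python
# Decimal (SI) storage units, in bytes (an assumption; binary units differ slightly).
TB = 10**12
PB = 10**15
ZB = 10**21

stored_2000 = 800_000 * PB   # figure quoted in the article
projected_2020 = 35 * ZB     # projection quoted in the article

growth_factor = projected_2020 / stored_2000
years = 2020 - 2000
# Implied compound annual growth rate, assuming steady growth every year.
cagr = growth_factor ** (1 / years) - 1

# Twitter at 7 TB/day sustained for one (non-leap) year, expressed in PB.
twitter_per_year_pb = 7 * TB * 365 / PB

print(f"2000 total: {stored_2000 / ZB:.1f} ZB")
print(f"Growth factor: {growth_factor:.2f}x over {years} years")
print(f"Implied CAGR: {cagr:.1%}")
print(f"Twitter, one year at 7 TB/day: {twitter_per_year_pb:.3f} PB")
```

In other words, the 2000 total was under one zettabyte, and reaching 35 ZB in two decades implies roughly 21% growth every year.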

When you stop and think about it, it's little wonder we're drowning in data. We store everything: environmental data, financial data, medical data, surveillance data, and the list goes on and on. For example, taking your smartphone out of its holster generates an event; when your commuter train's door opens for boarding, that's an event; check in for a plane, badge into work, buy a song on iTunes, change the TV channel, take an electronic toll route: every one of these actions generates data.

Okay, you get the point: there's more data than ever before, and all you have to do is look at the terabyte penetration rate for personal home computers as the telltale sign. About a decade ago, we used to keep a list of all the data warehouses we knew of that surpassed a terabyte; suffice it to say, things have changed when it comes to volume.

As implied by the term "Big Data," organizations are facing massive volumes of data. Organizations that don't know how to manage this data are overwhelmed by it. But the opportunity exists, with the right technology platform, to analyze almost all of the data (or at least more of it, by identifying the data that's useful to you) to gain a better understanding of your business, your customers, and the marketplace. And this leads to the current conundrum facing today's businesses across all industries.

As the amount of data available to the enterprise rises, the percentage of data it can process, understand, and analyze declines, thereby creating the blind zone.
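The blind zone follows from simple compounding: if the data available grows faster than the capacity to process it, the processed fraction must shrink every year. The sketch below assumes constant annual growth rates, and the 40% and 15% figures are purely hypothetical, chosen only to illustrate the effect.

```python
def processed_fraction(years: int, data_growth: float, capacity_growth: float,
                       initial_fraction: float = 1.0) -> list[float]:
    """Fraction of available data that can be processed in each year 0..years,
    assuming data volume and processing capacity each grow at a constant annual rate."""
    fractions = []
    for year in range(years + 1):
        # Capacity grows by (1 + capacity_growth) per year, data by (1 + data_growth).
        ratio = ((1 + capacity_growth) / (1 + data_growth)) ** year
        fractions.append(min(1.0, initial_fraction * ratio))  # can't process more than 100%
    return fractions


# Hypothetical rates: data grows 40% per year, processing capacity 15% per year.
for year, f in enumerate(processed_fraction(5, 0.40, 0.15)):
    print(f"year {year}: {f:.0%} processed, {1 - f:.0%} in the blind zone")
```

Only when capacity grows at least as fast as the data does the fraction stop falling; any persistent gap makes the blind zone wider every year.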

What's in that blind zone?

You don't know: it might be something great or maybe nothing at all, but the "don't know" is the problem (or the opportunity, depending on how you look at it). The conversation about data volumes has changed from terabytes to petabytes, with an inevitable shift to zettabytes, and all this data can't be stored in your traditional systems.

2. The Variety of Data

The volume associated with the Big Data phenomenon brings along a new challenge for data centers trying to deal with it: its variety.

With the explosion of sensors and smart devices, as well as social collaboration technologies, data in an enterprise has become complex, because it includes not only traditional relational data, but also raw, semi-structured, and unstructured data from web pages, weblog files (including click-stream data), search indexes, social media forums, email, documents, sensor data from active and passive systems, and so on.

What's more, traditional systems can struggle to store this data and to perform the analytics required to gain understanding from its contents, because much of the information being generated doesn't lend itself to traditional database technologies. In my experience, although some companies are moving down the path, by and large, most are just beginning to understand the opportunities of Big Data.

Quite simply, variety represents all types of data: a fundamental shift in analysis requirements from traditional structured data to include raw, semi-structured, and unstructured data as part of the decision-making and insight process. Traditional analytic platforms can't handle variety. However, an organization's success will rely on its ability to draw insights from the various kinds of data available to it, both traditional and non-traditional.
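One way to picture variety is as a single pipeline that must accept relational rows, semi-structured documents, and free text side by side. The hypothetical `ingest` helper below normalizes each into a common envelope using only Python's standard library. It assumes the caller already knows each payload's format, which in practice is often a problem in its own right.

```python
import csv
import io
import json


def ingest(payload: str, kind: str) -> dict:
    """Hypothetical helper: wrap one input in a common envelope (kind, fields, text)."""
    if kind == "structured":
        # e.g. a CSV export with a fixed header row; values arrive as strings
        row = next(csv.DictReader(io.StringIO(payload)))
        return {"kind": kind, "fields": dict(row), "text": None}
    if kind == "semi-structured":
        # e.g. JSON whose keys may differ from record to record
        return {"kind": kind, "fields": json.loads(payload), "text": None}
    if kind == "unstructured":
        # e.g. free text: keep it whole for later analysis
        return {"kind": kind, "fields": {}, "text": payload}
    raise ValueError(f"unknown kind: {kind}")


records = [
    ingest("order_id,amount\n1001,25.50\n", "structured"),
    ingest('{"sensor": "rail-42", "temp_c": 71.3, "gps": [45.1, -93.2]}', "semi-structured"),
    ingest("Customer says the app froze twice at checkout.", "unstructured"),
]
for r in records:
    print(r["kind"], r["fields"] or r["text"])
```

Only the first two inputs yield addressable fields; the free text is kept whole because extracting meaning from it requires further analysis.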

When we look back at our database careers, sometimes it's humbling to see that we spent more of our time on just 20 percent of the data: the relational kind that's neatly formatted and fits ever so nicely into our strict schemas. But the truth of the matter is that 80 percent of the world's data (and more and more of this data is responsible for setting new velocity and volume records) is unstructured, or semi-structured at best. If you look at a Twitter feed, you'll see structure in its JSON format, but the actual text is not structured, and understanding that can be rewarding.
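The Twitter point can be shown in a few lines: the JSON envelope parses directly into fields, while the text needs separate processing. The payload below is a simplified, hypothetical tweet-like object, not the real Twitter API schema, and counting hashtags with a regular expression stands in for the much harder task of actually understanding the text.

```python
import json
import re
from collections import Counter

# Simplified, hypothetical tweet-like payload; real Twitter API objects differ.
raw = '''{
  "id": 1,
  "created_at": "2020-11-02T10:15:00Z",
  "user": {"screen_name": "data_fan"},
  "text": "Loving #BigData talks today, but the #BigData hype is real. #Hadoop"
}'''

tweet = json.loads(raw)

# The envelope is structured: every field is addressable by key.
author = tweet["user"]["screen_name"]
timestamp = tweet["created_at"]

# The text is not: even pulling out hashtags takes extra processing.
hashtags = Counter(tag.lower() for tag in re.findall(r"#(\w+)", tweet["text"]))

print(author, timestamp)
print(hashtags.most_common())  # [('bigdata', 2), ('hadoop', 1)]
```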

Video and picture images aren't easily or efficiently stored in a relational database; certain event data can change dynamically (such as weather patterns), which isn't well suited to strict schemas; and so on. To capitalize on the Big Data opportunity, enterprises must be able to analyze all types of data, both relational and non-relational: text, sensor data, audio, video, transactional, and more.

3. The Velocity of Data

Just as the sheer volume and variety of data we collect and store have changed, so, too, has the velocity at which it is generated and needs to be handled. A conventional understanding of velocity typically considers how quickly the data is arriving and being stored, and its associated rates of retrieval. Managing all of that quickly is good, and the volumes of data we are looking at are a consequence of how quickly the data arrives, but velocity means more than these conventional definitions suggest.

To accommodate velocity, a new way of thinking about a problem must start at the inception point of the data. Rather than confining the idea of velocity to the growth rates associated with your data repositories, we suggest you apply this definition to data in motion: the speed at which the data is flowing.
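Treating velocity as the speed at which data is flowing suggests measuring it where the data arrives rather than where it is stored. This is a minimal sketch of a sliding-window rate meter; the `FlowRateMeter` class is hypothetical, and it assumes events arrive in timestamp order with timestamps given in seconds.

```python
from collections import deque


class FlowRateMeter:
    """Hypothetical meter: events per second over a sliding time window."""

    def __init__(self, window_seconds: float = 1.0):
        self.window = window_seconds
        self.timestamps = deque()  # arrival times still inside the window

    def record(self, ts: float) -> float:
        """Register an event arriving at time ts (in order); return the current rate."""
        self.timestamps.append(ts)
        # Evict events that have fallen out of the window ending at ts.
        while self.timestamps and self.timestamps[0] <= ts - self.window:
            self.timestamps.popleft()
        return len(self.timestamps) / self.window


meter = FlowRateMeter(window_seconds=1.0)
arrivals = [0.0, 0.1, 0.2, 0.3, 1.25, 2.5]  # a burst, then a lull
rates = [meter.record(t) for t in arrivals]
print(rates)  # [1.0, 2.0, 3.0, 4.0, 2.0, 1.0]
```

Because old events are evicted as new ones arrive, the meter reflects the current flow rather than the cumulative size of a repository.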

After all, we're in agreement that today's enterprises are dealing with petabytes of data instead of terabytes, and the increase in RFID sensors and other information streams has led to a constant flow of data at a pace that traditional systems cannot handle. Sometimes, getting an edge over your competition can mean identifying a trend, problem, or opportunity only seconds, or even microseconds, before someone else.

In addition, more and more of the data being produced today has a very short shelf-life, so organizations must be able to analyze it in near real-time if they hope to find insights in it. In traditional processing, you can think of running queries against relatively static data: for example, the query "Show me all people living in the ABC flood zone" would result in a single result set to be used as a warning list for an incoming weather pattern. With streams computing, you can execute a process similar to a continuous query that identifies people who are currently "in the ABC flood zone," but you get continuously updated results because location information from GPS data is refreshed in real-time.
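The flood zone example contrasts one query over data at rest with a continuous query over data in motion. The sketch below models the zone as a hypothetical latitude/longitude bounding box and the GPS feed as an in-memory list of `(person, lat, lon)` updates; a real deployment would use a streams engine rather than a Python generator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """Axis-aligned bounding box standing in for the 'ABC flood zone' (hypothetical)."""
    min_lat: float
    max_lat: float
    min_lon: float
    max_lon: float

    def contains(self, lat: float, lon: float) -> bool:
        return self.min_lat <= lat <= self.max_lat and self.min_lon <= lon <= self.max_lon


ABC_ZONE = Zone(40.0, 40.5, -75.0, -74.5)  # hypothetical coordinates


def static_query(homes: dict[str, tuple[float, float]], zone: Zone) -> set[str]:
    """Traditional processing: a single result set computed from data at rest."""
    return {person for person, (lat, lon) in homes.items() if zone.contains(lat, lon)}


def continuous_query(gps_updates, zone: Zone):
    """Streams-style processing: emit the set of people currently in the zone
    after every location update."""
    inside = set()
    for person, lat, lon in gps_updates:
        if zone.contains(lat, lon):
            inside.add(person)
        else:
            inside.discard(person)
        yield frozenset(inside)


homes = {"ana": (40.2, -74.8), "ben": (41.0, -73.9)}
print(static_query(homes, ABC_ZONE))  # {'ana'}

stream = [("ben", 40.3, -74.7), ("ana", 40.9, -74.0), ("cai", 40.1, -74.6)]
for snapshot in continuous_query(stream, ABC_ZONE):
    print(sorted(snapshot))  # ['ben'], then ['ben'], then ['ben', 'cai']
```

Note the difference: `ana` lives in the zone, so the static query reports her, but her latest GPS fix is outside it, so the continuous query does not. The continuous query also only knows about a person once their first location update arrives.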

Dealing effectively with Big Data requires that you perform analytics against the volume and variety of data while it is still in motion, not just after it is at rest. Consider examples ranging from tracking neonatal health to financial markets; in every case, they require handling the volume and variety of data in new ways.

End Notes

You can't afford to sift through all the data that's available to you using your traditional processes; it's just too much data with too little known value and too much of a gambled cost. Big Data platforms give you a way to economically store and process all that data and find out what's valuable and worth exploiting. What's more, since we are talking about analytics for data at rest and data in motion, not only is the actual data from which you can find value broader, but you're also able to use and analyze it more quickly, in real time.

I recommend you go through these articles to get acquainted with tools for big data:

- Getting Started with Apache Hive – A Must Know Tool For all Big Data and Data Engineering Professionals

- Introduction to the Hadoop Ecosystem for Big Data and Data Engineering

- PySpark for Beginners – Take your First Steps into Big Data Analytics (with Code)

Let us know your thoughts in the comments below.

Reference

Understanding Big Data: Analytics for Enterprise Class Hadoop and Streaming Data.


Source: https://www.analyticsvidhya.com/blog/2020/11/what-is-big-data-a-quick-introduction-for-analytics-and-data-engineering-beginners/

Posted by: nancemaland.blogspot.com
